Network in Hyper-scale data centers – Facebook

This is a guest post by Suryanarayana M N V. Having led teams working on networking protocols, Surya has in-depth knowledge of networking. He has a keen interest in the areas of Network Virtualization and NFV.

A hyper-scale data center has tens of thousands of servers. What does the network look like in such data centers? It needs to be simple, scalable and fully automated. In this blog, I will share some insights into the network of Facebook's Altoona data center, which is referred to as the data-center fabric. The main source of information is the Facebook blog on this topic.

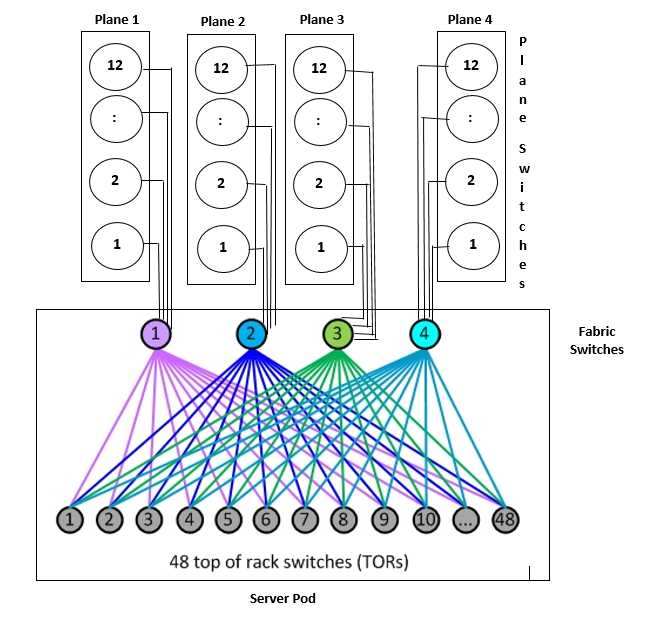

In the data center, the basic unit is referred to as a server-pod. The network connections for one server-pod are illustrated in the diagram below:

Facebook Network

Let us examine in detail the scale & over-subscription in a server-pod:

- Each server-pod has 4 fabric switches & up to 48 ToR switches.

- Each server has one 10G link to its ToR switch.

- The ToR switch is connected to each of the fabric switches with a 40G link.

- A ToR switch can support 48 servers in the rack. The maximum number of servers per server-pod is 48 * 48 = 2304 servers.

- If there are 32 servers per rack, the maximum number of servers per server-pod is 48 * 32 = 1536 servers.

- Over-subscription for ToR switch:

  - Uplinks: 4 X 40G = 160G

  - Downlinks: 10G X 48 servers = 480G; 10G X 32 servers = 320G

  - Over-subscription ratio: 3:1 (48 servers), 2:1 (32 servers)
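The server-pod numbers above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic; the function and constant names are my own and do not come from the Facebook blog.

```python
from fractions import Fraction

LINK_10G = 10  # Gbps, server to ToR switch
LINK_40G = 40  # Gbps, ToR switch to fabric switch


def pod_servers(tor_switches: int, servers_per_rack: int) -> int:
    """Maximum servers in one server-pod (one ToR switch per rack)."""
    return tor_switches * servers_per_rack


def tor_oversubscription(servers_per_rack: int, uplinks: int = 4) -> Fraction:
    """Downlink bandwidth divided by uplink bandwidth for one ToR switch."""
    downlink = servers_per_rack * LINK_10G
    uplink = uplinks * LINK_40G
    return Fraction(downlink, uplink)


for servers in (48, 32):
    ratio = tor_oversubscription(servers)
    print(f"{servers} servers/rack: {pod_servers(48, servers)} servers per pod, "
          f"over-subscription {ratio.numerator}:{ratio.denominator}")
```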

How are these server-pods connected?

The connectivity between server-pods is through another set of switches referred to as plane-switches.

- There are four planes: Plane-1, Plane-2, Plane-3 & Plane-4.

- Each plane has 12 switches. By design, there can be up to 48 switches in one plane.

- Fabric Switch-1 is connected to each of the 12 switches in Plane-1 by a 40G link.

- Similarly, Fabric Switch-2/3/4 are connected to each of the 12 switches in Plane-2/3/4 respectively.

- Over-subscription ratio for fabric switch:

  - 4:1 – 12 switches per plane (48 40G links to ToR switches & 12 40G links to plane-switches)

  - 1:1 – 48 switches per plane
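Since every link on a fabric switch is 40G, its over-subscription ratio reduces to a ratio of link counts. A minimal sketch, with a hypothetical helper name:

```python
from fractions import Fraction


def fabric_oversubscription(tor_links: int, plane_switches: int) -> Fraction:
    """ToR-facing links over plane-facing links; all links are 40G,
    so the bandwidth ratio equals the link-count ratio."""
    return Fraction(tor_links, plane_switches)


for plane_switches in (12, 48):
    r = fabric_oversubscription(48, plane_switches)
    print(f"{plane_switches} switches per plane -> {r.numerator}:{r.denominator}")
```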

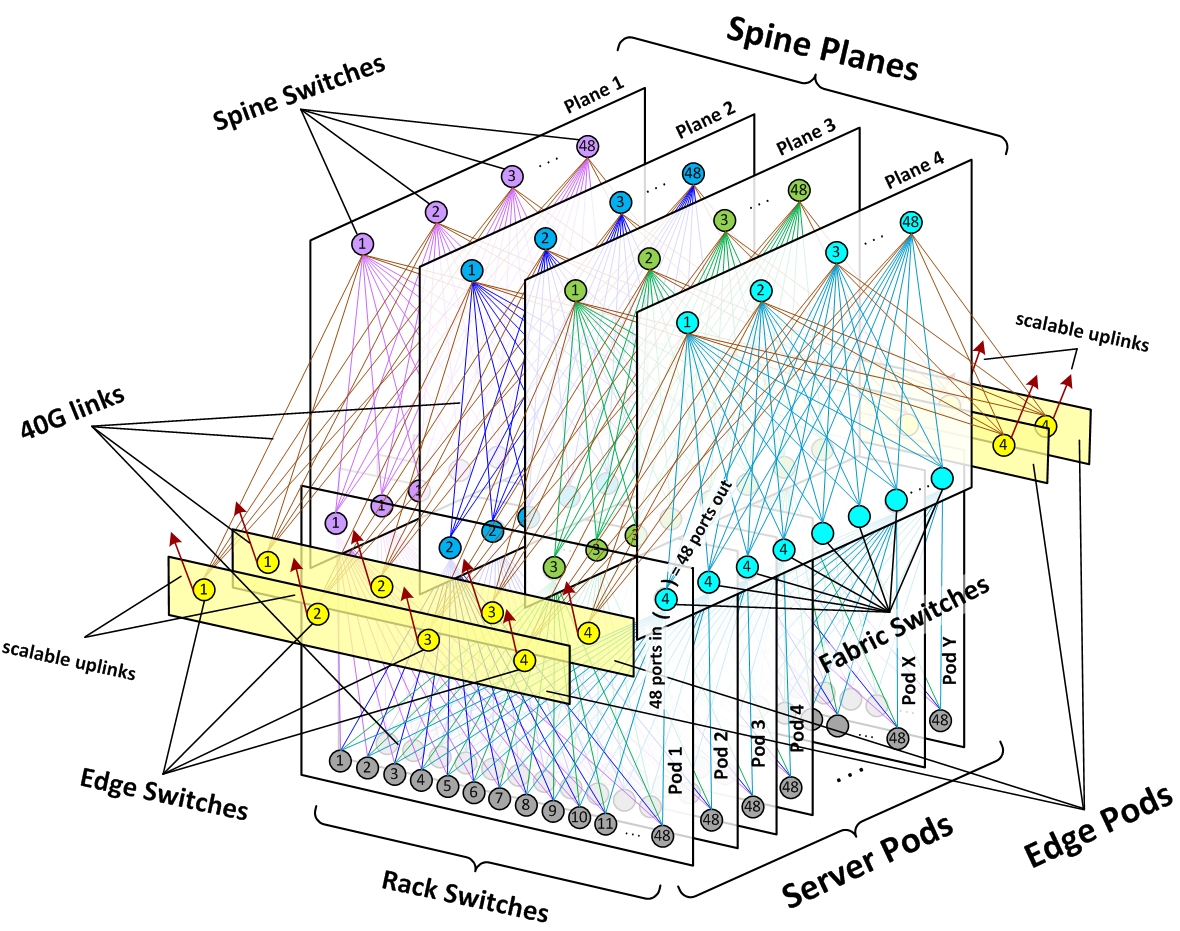

The 3D view of the network is depicted in this diagram:

Facebook Datacenter

For easier understanding, a 2D diagram is given in Jedelman’s blog.

How many servers can this network design support?

To support 50,000 servers, we would need 22 server-pods (48 servers per ToR, i.e., 2304 servers per pod) or 33 server-pods (32 servers per ToR, i.e., 1536 servers per pod).
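The pod count is a ceiling division of the target server count by the per-pod capacity. A small sketch, assuming 48 ToR switches per server-pod as described earlier (the function name is mine):

```python
import math


def pods_needed(total_servers: int, servers_per_rack: int,
                racks_per_pod: int = 48) -> int:
    """Smallest number of server-pods that can hold total_servers."""
    pod_capacity = racks_per_pod * servers_per_rack
    return math.ceil(total_servers / pod_capacity)


for servers_per_rack in (48, 32):
    print(servers_per_rack, "servers per ToR:",
          pods_needed(50_000, servers_per_rack), "server-pods")
```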

What is the scalability of this network?

- To provide more bandwidth between fabric switches & ToR switches, more 40G links can be added per ToR switch (say 6 or 8). Another option is to add two more fabric switches (i.e., 6 fabric switches) and two more planes (i.e., Plane-5 & Plane-6).

- To provide more bandwidth between server-pods, the number of switches per plane can be increased from the current 12 up to 48.
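To see what these scaling options buy, the sketch below sweeps the number of 40G uplinks per ToR switch and the number of switches per plane. It assumes a fully populated rack of 48 servers and 48 ToR switches per pod; the intermediate plane sizes (24, 36) are only illustrative values, not configurations mentioned by Facebook.

```python
from fractions import Fraction

SERVERS_PER_RACK = 48  # assumption: fully populated rack
DOWNLINK_GBPS = 10
UPLINK_GBPS = 40


def ratio_text(down_gbps: int, up_gbps: int) -> str:
    """Format an over-subscription ratio in lowest terms, e.g. '3:1'."""
    r = Fraction(down_gbps, up_gbps)
    return f"{r.numerator}:{r.denominator}"


def tor_ratio(uplinks: int) -> str:
    """ToR over-subscription for a given number of 40G uplinks."""
    return ratio_text(SERVERS_PER_RACK * DOWNLINK_GBPS, uplinks * UPLINK_GBPS)


def fabric_ratio(plane_switches: int, tor_links: int = 48) -> str:
    """Fabric-switch over-subscription for a given plane size."""
    return ratio_text(tor_links * UPLINK_GBPS, plane_switches * UPLINK_GBPS)


for uplinks in (4, 6, 8):
    print(f"{uplinks} uplinks per ToR: {tor_ratio(uplinks)}")
for plane_switches in (12, 24, 36, 48):
    print(f"{plane_switches} switches per plane: {fabric_ratio(plane_switches)}")
```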

What kind of switches do we need?

- ToR switch - These are standard switches based on Broadcom's Trident or Trident-2, i.e., 48X10G & 4X40G (or 6X40G).

- Fabric switch - This needs 60 40G links (48 40G towards ToR & 12 40G towards plane) and should be scalable to 96 40G links. These would be chassis-based (i.e., modular) switches.

- Plane switch - The number of 40G links required equals the number of server-pods & edge-pods that need to be supported. These would need at least 32 40G links.

- The 6-pack switches announced by Facebook recently seem to fit well for the role of fabric & plane switches.
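The port counts above follow directly from the topology: a fabric switch needs one 40G port per ToR switch in its pod plus one per switch in its plane, and a plane switch needs one per pod it serves. A rough sketch; the helper names are mine, and the edge-pod count of 4 is a made-up placeholder, since the post does not state how many edge-pods there are.

```python
def fabric_switch_ports(tor_switches: int, plane_switches: int) -> int:
    """40G ports on one fabric switch: one per ToR switch in the pod
    plus one per switch in the plane it attaches to."""
    return tor_switches + plane_switches


def plane_switch_ports(server_pods: int, edge_pods: int) -> int:
    """40G ports on one plane switch: one per server-pod and edge-pod."""
    return server_pods + edge_pods


print(fabric_switch_ports(48, 12))  # current design: 12 switches per plane
print(fabric_switch_ports(48, 48))  # fully scaled plane
# edge_pods=4 is a hypothetical value used only for illustration
print(plane_switch_ports(server_pods=22, edge_pods=4))
```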

What problem did this network design solve?

Quoting FB’s Najeeb Ahmad:

“We were already buying the largest box you can buy in the industry, and we were still hurting for more ports ..”

“The architecture is such that you can continue to add pods until you run out of physical space or you run out of power. In Facebook’s case, the limiting factor is usually the amount of energy that’s available.”

With this design, the network elements are kept simple. When the network elements are simple, multiple vendors can offer such boxes, and there is the option of using white-box switches.